Azure Data Factory and Logic Apps for Dynamics 365 Finance and Operations

Hello Community, this new blog post is for you if you are wondering how to leverage Azure integration components on top of your ERP, Dynamics 365 Finance and Operations. How, when, and why can we use these components to integrate large and low volumes of data? I will also explain the basic knowledge required to go further, especially for Azure Data Factory as an ETL component before integration. What are the benefits compared to the Data Management Framework or DataFlow of Dynamics 365? And which one should you use: Logic Apps or Azure Data Factory? Yes, so many questions here ;)

As always, at the end of this article I will do a quick demo of how I use Azure Integration Services on top of Dynamics 365 F&O.

Azure Data Factory is part of the Integration Services.

Azure Data Factory (ADF) is an essential service for all data-related activities in Azure. It is a flexible and powerful Platform as a Service offering with a multitude of connectors and integration capabilities. It is the heart of ETL in Azure. This tool can support large volumes of data! I often propose and use it in Dynamics 365 implementations as a core service, either for data migration or during the lifecycle of the project itself.

In the world of big data, raw, unorganized data is often stored in relational, non-relational, and other storage systems. However, on its own, raw data doesn't have the proper context or meaning to provide meaningful insights to analysts, data scientists, or business decision makers.

Big data requires a service that can orchestrate and operationalize processes to refine these enormous stores of raw data into actionable business insights. Azure Data Factory is a managed cloud service that's built for these complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects.
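To make the ETL idea concrete, here is a minimal sketch in plain Python. It is not how ADF works internally: the CSV content, the field names, and the in-memory list used as a target are all assumptions made up for this illustration. In a real pipeline, each step would be an ADF activity or a data flow.

```python
import csv
import io

# Hypothetical raw export; in ADF this would come from a source dataset.
RAW_CSV = """customer_id,name,amount
1, Contoso ,100.50
2,Fabrikam,
3, Adventure Works ,75
"""


def extract(text):
    """Extract: read the raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim names, convert types, and drop rows without an amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete rows
        cleaned.append({
            "customer_id": int(row["customer_id"]),
            "name": row["name"].strip(),
            "amount": float(row["amount"]),
        })
    return cleaned


def load(rows, target):
    """Load: append the refined rows to the target store (a list here)."""
    target.extend(rows)
    return len(rows)


target_table = []
loaded = load(transform(extract(RAW_CSV)), target_table)
print(f"{loaded} rows loaded")
```

In an ELT variant, the raw rows would be loaded first and transformed afterwards inside the target system.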

ADF is also a good fit for hybrid/on-premises scenarios, where you can set up multiple data sources with many kinds of data structures and connectors, including my favorite one, Azure Data Lake Storage. Yes, again: you will see here that I always use it in my integration pattern architectures.

Source : Microsoft Documentation

I would also always suggest going through Adam Marczak's YouTube channel, where he regularly publishes great tutorials on Azure. In particular, here is a good introduction to Azure Data Factory:

In a way, ADF is also a good product that makes it easier to build SQL Server Integration Services (SSIS) pipelines. In fact, you could also combine both in one data pipeline for Dynamics 365 projects.

Source : Microsoft Documentation

On the other hand, you can see that we don't have only Azure Data Factory for integration services, but also Azure Logic Apps!

Logic Apps is very similar to Power Automate, but for me it also offers more flexibility in the ALM process, such as deployment and Azure DevOps features, including version control and CI/CD. That's why, for integration processes, I would always choose Logic Apps over Power Automate. You can also export any flow you have already built in Power Automate and bring it into Logic Apps as a template.

That said, you also need to be careful on the governance side. Logic Apps is better suited to small-volume, transactional processes with a few basic transformations (like Power Automate, in fact).
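This rule of thumb can be written down as a small helper. It is only a sketch of the reasoning in this article: the function name and the threshold of 10,000 rows are assumptions chosen for illustration, not official limits of either product.

```python
# Hypothetical cut-off between "small" and "large" volume; adjust to your project.
SMALL_VOLUME_LIMIT = 10_000


def suggest_service(rows_per_run, needs_heavy_transform):
    """Suggest an Azure integration service from volume and transformation needs."""
    if needs_heavy_transform or rows_per_run > SMALL_VOLUME_LIMIT:
        # Large batches or complex transformations: ETL territory.
        return "Azure Data Factory"
    # Small, transactional flows with a few basic transformations.
    return "Logic Apps"


print(suggest_service(rows_per_run=50, needs_heavy_transform=False))
print(suggest_service(rows_per_run=5_000_000, needs_heavy_transform=True))
```

A real decision would also weigh connectors, governance, and pricing, as discussed in the rest of this article.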

Logic Apps is more powerful than ADF in terms of connectors (450 versus 90 for ADF).

To summarize, Azure Logic Apps is a cloud service that helps you schedule, automate, and orchestrate tasks, business processes, and workflows when you need to integrate apps, data, systems, and services across enterprises or organizations. Logic Apps simplifies how you design and build scalable solutions for app integration, data integration, system integration, enterprise application integration (EAI), and B2B communication, whether in the cloud, on premises, or both.

Both ADF and Logic Apps can be added to an ALM process with Git version control on Azure DevOps!

Again, here is a great video to watch as an introduction to Logic Apps in action!

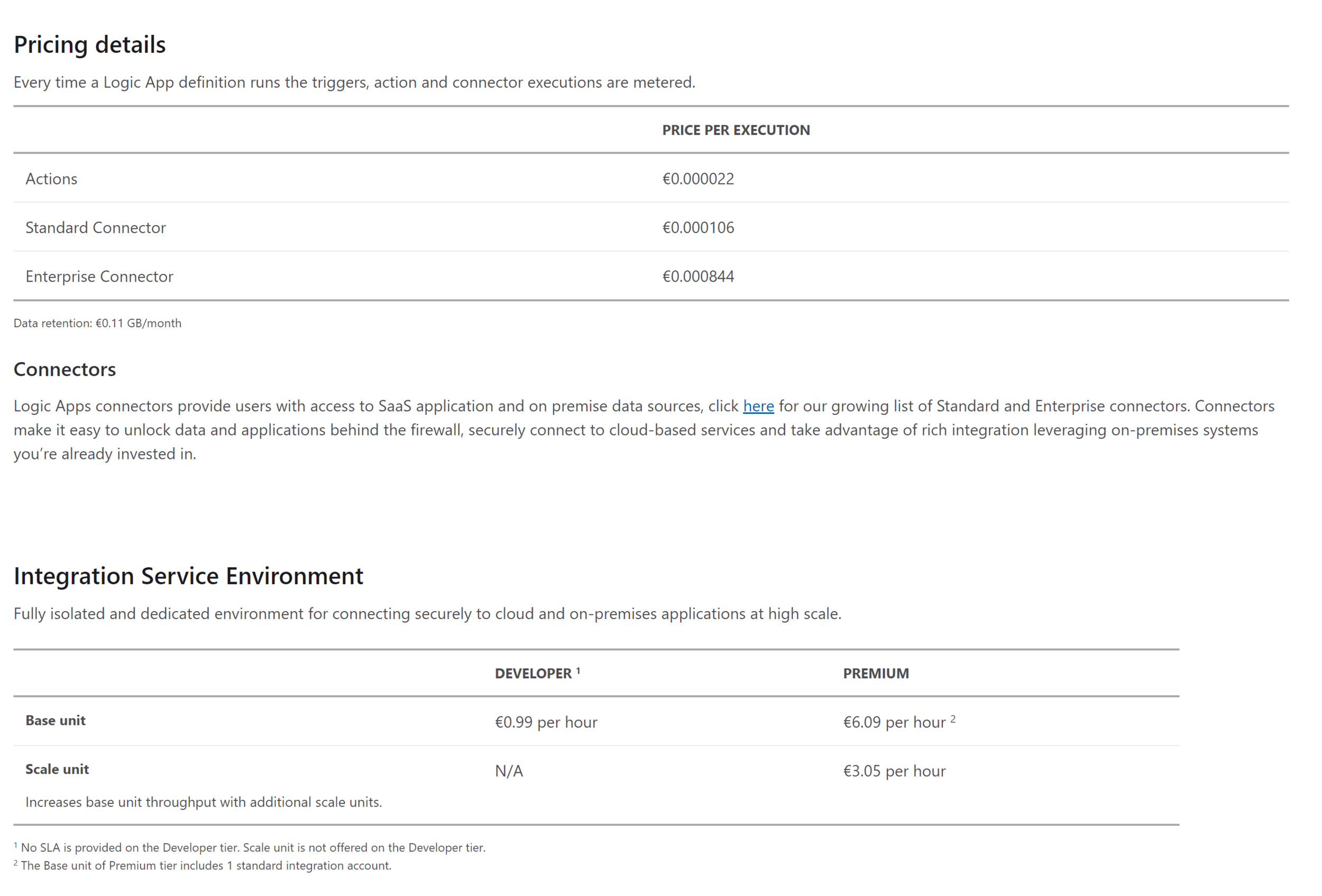

Yes, before going to the Dynamics 365 F&O side only, it's always good to look at the pricing :)

For Logic Apps, the pricing is quick and easy to understand, and it is very cheap. But be very careful: for me, as a best practice, you should go directly to an Integration Service Environment dedicated to you. Otherwise, you will be in a shared global pool of Logic Apps. In terms of response time, and since Logic Apps is mostly intended for fast synchronous flows, the price can go up very quickly, and likewise if you have multiple actions and connectors (premium ones) in multiple flows.

Source : Azure Price list as of October 2020

The Azure Data Factory price list is much more complex than the Logic Apps one. Yes… but it is also very cheap, especially if you need those data pipelines only for a data migration project! You pay only when you use it (like a lot of Azure components, yes…), and it is more like one global run each night, as a batch, to extract, transform, and load billions of rows of data coming from different sources.
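To show how this pay-per-use logic adds up for a nightly batch, here is a rough estimation sketch. It assumes that ADF bills orchestration per 1,000 activity runs and data movement per DIU-hour, and it ignores the other meters. All prices and volumes in the example are placeholders, not real Azure rates: always take the current figures from the official price list.

```python
def estimate_monthly_cost(runs_per_month, activity_runs_per_run, diu_hours_per_run,
                          price_per_1000_activity_runs, price_per_diu_hour):
    """Rough monthly estimate for a scheduled pipeline (simplified, two meters only)."""
    orchestration = activity_runs_per_run / 1000 * price_per_1000_activity_runs
    data_movement = diu_hours_per_run * price_per_diu_hour
    return runs_per_month * (orchestration + data_movement)


# Placeholder figures: one run each night, 20 activities, 2 DIU-hours per run.
monthly = estimate_monthly_cost(
    runs_per_month=30,
    activity_runs_per_run=20,
    diu_hours_per_run=2.0,
    price_per_1000_activity_runs=1.0,  # placeholder, not a real rate
    price_per_diu_hour=0.25,           # placeholder, not a real rate
)
print(f"Estimated monthly cost: {monthly:.2f}")
```

Since nothing is billed when no pipeline runs, a pipeline that is used only during a data migration project stops costing anything once the migration is over.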

Like Logic Apps, you could deploy your own Self-Hosted Integration Runtime rather than a shared one with multiple customers. Of course, this is a good practice for a production data pipeline. Also consider having multiple virtual machines in a scale set for high availability.

Source : Azure Price list as of October 2020

For the rest, you will find everything you need in my YouTube video in 4K! I think it will be easier to digest in action than if I gave you 100 screenshots!

In the video (in English), you will see:

Pattern designs for Dynamics 365 F&O with Azure Data Factory & Logic Apps !

Comparison with DMF (Data Management Framework of D365 F&O), OData API, Batch Data API

The basics of Logic Apps with a quick example, plus ALM with Dynamics 365 F&O

A longer part on Azure Data Factory for D365 F&O: ALM and Git (DevOps), Integration Services (hybrid for on-premises data), crossing data with other sources, transforming data with DataFlow, and loading it into D365 F&O

Two design patterns: push data and/or extract data

I will also show how to use the Azure Data Lake component again!!! Like in all my previous articles… :)

Conclusion (which one to use, when, why, how to learn, etc.)
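As a small taste of the OData API mentioned in the list above, here is a sketch that builds a query URL for a D365 F&O data entity. The host name is a made-up placeholder, `CustomersV3` and the `usmf` company are used only as examples, and authentication (an Azure AD token) is left out entirely.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url, entity, top=None, filter_expr=None, cross_company=False):
    """Build an OData query URL for a data entity exposed under /data."""
    params = {}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    if cross_company:
        params["cross-company"] = "true"
    url = f"{base_url.rstrip('/')}/data/{entity}"
    if params:
        # Keep "$" and single quotes readable; encode spaces as %20.
        url += "?" + urlencode(params, quote_via=quote, safe="$'")
    return url


# Hypothetical environment URL, for illustration only.
url = build_odata_url(
    "https://contoso.operations.dynamics.com/",
    "CustomersV3",
    top=5,
    filter_expr="dataAreaId eq 'usmf'",
    cross_company=True,
)
print(url)
```

This kind of call fits the small-volume, transactional side of the comparison. For large volumes, the batch-oriented options covered in the video are a better match.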